[This is an extended essay on the news out of Norway yesterday. See the closing for encouragement toward Opera and its fans, whatever the open source projects they choose to join, from me on behalf of Mozilla. /be]

Founder Flashback

I wrote about the founding of HTML5 in June, 2004, without dropping that acronym, mentioning only “Opera and others” as partners, because Apple was shy. Fragments of memory:

- @t was then working for Microsoft (he’s at Mozilla now, it’s great to have him) and sitting in front of me during the second day of the workshop.

- Vested interests touted XForms as inevitable (the UK Insurance Industry had standardized on it!).

- Browser vendors were criticized as everything from uncooperative to backward in resisting the XML Utopia.

- JavaScript took its lumps, including from Bert (whom I met years later at SXSW and got on fine with).

Hixie (pre-Google, already working on Web Forms 2) and Håkon of Opera joined David Hyatt of Apple, Mozilla’s @davidbaron and me at a San Jose pub afterward. There I uncorked and said something like “screw it, let’s do HTML5!”

We intended to do the work in the WHAT-WG. Håkon reminded us that the only likely path to Royalty-Free patent covenants remained the W3C, so we should aim to land specs there. He also correctly foresaw that Microsoft would not join our legal non-entity, and would instead prefer the W3C.

So we drank a toast to HTML5.

Fast-Forward to 2013

In early January, I heard from an old friend, who wrote “Some major shift is happening at Opera, senior developers are being laid off, there’s a structural change to the company….” Other sources soon confirmed what became news yesterday: Opera was dropping Presto for WebKit (the Chromium flavor).

One of my sources wrote about Mozilla and why it matters to him:

Smart people, and people I know and like, at that. Interesting work going on in [programming languages and operating systems]. Probably the last relatively independent web technology player (not something I cared about historically, but after leaving the Apple iOS fiefdom for the Google Android fiefdom, I find I’m just as locked in and feel just as monitored).

I hear this kind of comment more often lately. I think it’s signal, not noise.

Why Mozilla Matters

The January signal reminded me of mail I wrote to a colleague about “How Mozilla is different”:

- We innovate early, often, and in the open: both standards and source, draft spec and prototype implementation, playing fair and revising both to achieve consensus.

- Our global community cuts across search, device, social network businesses and agendas, and wants the end-to-end and intertwingled mix-and-match properties of the Web, even if people can’t always describe these technical properties precisely, or at all. What they want most is to not be “owned”. They seek user sovereignty.

- We restored browser competition by innovating ahead of standards in user-winning ways. We’re doing it again, and with allies pulling other platforms up: Samsung is patching WebKit to have the B2G-pioneered device APIs.

- Because we are relatively free of agenda, we can partner, federate, integrate horizontally instead of striving to capture users in yet another all-purpose vertical silo.

- We advance the vision of you owning your own experience and data, attached to your identity, with whatever services you choose integrated. Examples include the Social API and the new Firefox-sync project that outsources storage service via DropBox, Box, etc.

The last point is hugely important. It’s why we have had such good bizdev and partnering with Firefox OS. But it is also why we have a strongly trusted brand and community engagement: people expect us to be an independent voice, to fight the hard but necessary fight, to be willing to stand apart sometimes.

I see these as critical distinguishing factors. No competing outfit has all of these qualities.

Thoughts on WebKit

I read webkit-dev; some very smart people post there. Yet the full WebKit story is more complex and interesting than shallow “one WebKit” triumphalist commentary suggests.

First, there’s not just one WebKit. Web developers dealing with Android 2.3 have learned this the hard way. But let’s generously assume that time (years of it) heals all fragments or allows them to become equivalent.

WebKit has eight build systems, which cause perverse behavior. Peel back the covers and you’ll find tension about past code forks: V8 vs. Apple’s JavaScriptCore (“Nitro”), iOS (in a secret repository at apple.com for years, finally landing), various graphics back ends, several network stacks, two multi-process models (Chrome’s, stealth-developed for two years; and then Apple’s “WebKit2” framework). Behind the technical clashes lie deep business conflicts.

When tensions run high the code sharing is not great. Kudos to key leaders from the competing companies for remaining diplomatic and trying to collaborate better.

Don’t get me wrong. I am not here to criticize WebKit. It’s not my community, and it has much to offer the world on top of its benefits to several big companies — starting with providing a high-quality open-source implementation of web standards alongside Mozilla’s. But it is not the promised land (neither is Mozilla).

If you are interested in more, see Eric Seidel’s WebKit wishes post for a heartfelt essay from someone who used to work at Apple and now works (long time on Chrome) at Google.

My point is that there’s no “One WebKit”. Distribution power matters (more below near the end of this essay). Conflicting business agendas hurt. And sharing is always hard. There are already many cooks.

In software, it’s easier in the short run to copy design than code, and easier to copy code than share it. In this spirit the open-source JS engines (two for WebKit) have learned from one another. Mozilla uses JSC’s YARR RegExp JIT, and we aim to do more such copying or sharing over time.

Web Engine Trade-offs

Some of you may still be asking: beyond copying design or sharing pieces of code, why won’t Mozilla switch to WebKit?

Answering this at the level of execution economics seems to entail listing excuses for not doing something “right”. I reject that implied premise. More below, but at all three levels of vision, strategy, and certainly execution, Mozilla has good reasons to keep evolving Gecko, and even to research a mostly-new engine.

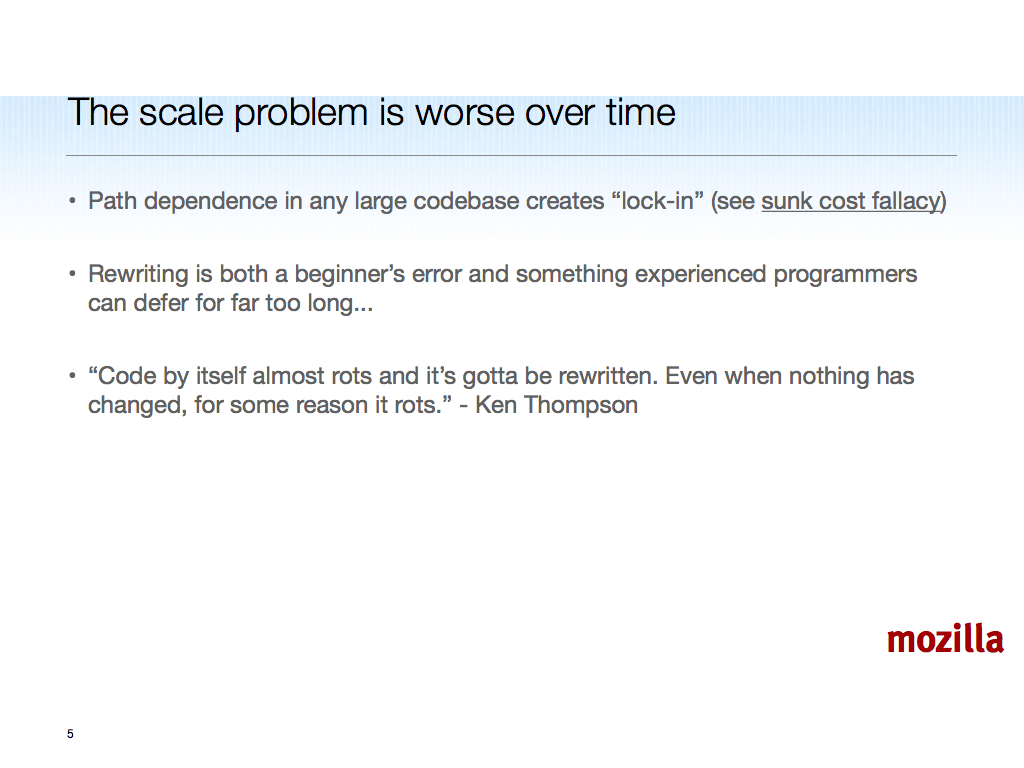

But to answer the question “why not switch” directly: the switching costs for us, in terms of pure code work (never mind loss of standards body and community leverage), are way too high for “switching to WebKit” on any feasible, keep-your-users, current-product timeline.

XUL is one part of it, and a big part, but not the only large technical cost. And losing XUL means our users lose the benefits of the rich, broad and deep Firefox Add-ons ecosystem.

In other words, desktop Firefox cannot be a quickly rebadged Chromium and still be Firefox. It needs XUL add-ons, the Awesome Bar, our own privacy and security UI and infrastructure, and many deep-platform pieces not in Chromium code.

Opera, as a pure business without all of XUL, the Mozilla mission, and our community, has far lower technical switching costs. This is especially so with Opera’s desktop share so low, and with Opera Mini acting as a transcoding proxy that “lowers” full web content and so tends to isolate content authors from the “site is broken, blame the browser” user-retention problems that afflict Opera’s Presto-on-the-desktop engine and browser.

Nevertheless, my sources testify that the technical switching costs for Opera are non-trivial, in spite of being lower relative to Mozilla’s exorbitant (multi-year, product-breaking) costs. This shows that the pure “business case” prevailed: Opera will save engineering headcount and be even more of a follower (at least at first) in the standards bodies.

The Big Picture

At the Mozilla mission level, monoculture remains a problem that we must fight. The web needs multiple implementations of its evolving standards to keep them interoperable.

Hyatt said pretty much the last sentence (“the web needs more implementations of its evolving standards to keep them interoperable”) to me in 2008 when he and I talked about the costs of switching to WebKit, technical and non-technical. That statement remains true, especially as WebKit’s bones grow old while the two or three biggest companies sharing it struggle to evolve it quickly.

True, some already say “bring on the monoculture”, imagining a single dominant power will distribute and evergreen one ideal WebKit. Such people may not have lived under monopoly rule in the past. Or they don’t see far enough ahead, and figure “après moi, le déluge” and “in the long run, we are all dead.” These folks should read John Lilly’s cautionary tumblr.

@andreasgal notes that the W3C tries not to make a standard without two or more interoperating prototype implementations, and that this now favors independent Gecko and WebKit, since the only other major engine for WebKit to pair with is Microsoft’s Trident. We shall see, but of course sometimes everyone cooperates, and politics makes strange bedfellows.

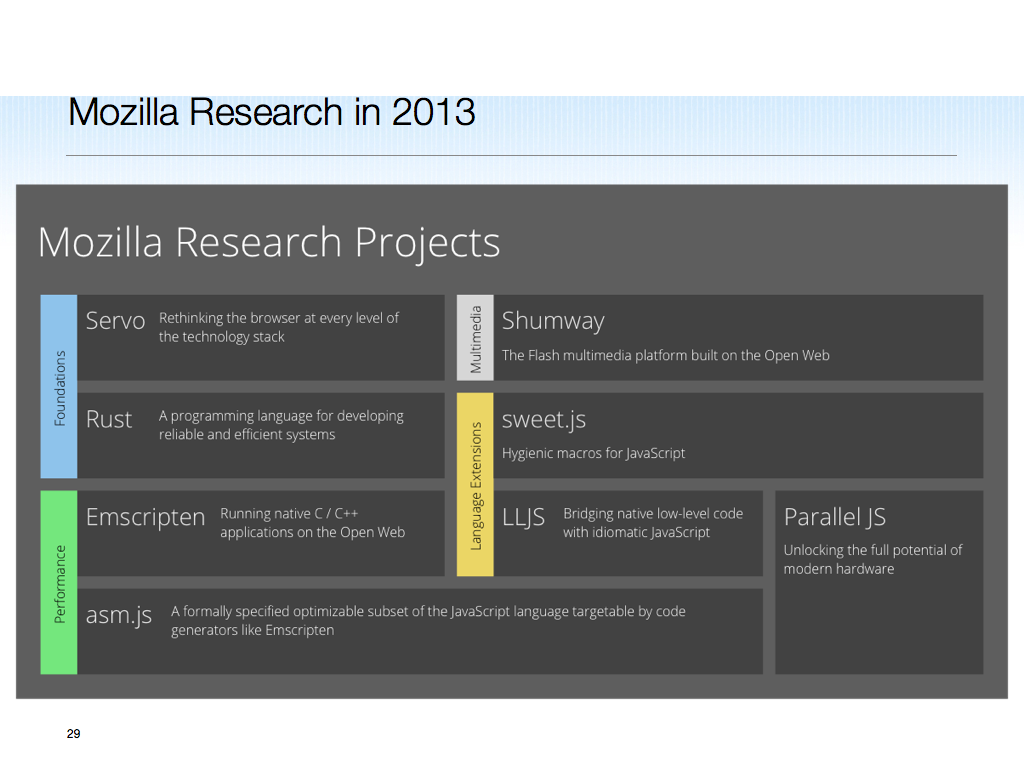

I expect more web engines in the next ten years, not fewer, given hardware trends and the power wall problem. In this light, Mozilla is not only investing in Gecko now; we are also researching Servo, which focuses on the highly parallel (both multicore CPU and massively parallel GPU) hardware architectures that are coming fast.

If we at Mozilla ever were to lose the standards body leverage needed to uphold our mission, then I would wonder how many people would choose to work for or with us. If Servo also lacked research partners and good prospects due to changing technology trends, we would have more such retention troubles. In such a situation, I would be left questioning why I’m at Mozilla and whether Mozilla matters.

But I don’t question those things. Mozilla is not Opera. If we were a more conventional business, without enough desktop browser-market share, we would probably have to do what Opera has done. But we’re not just a business, and our desktop share seems to be holding or possibly rising — due in part to the short-term wins we have been able to build on Gecko.

Future Web Engines

So realistically, to switch to WebKit while not dropping out of the game for years, we would have to start a parallel stealth effort porting to WebKit. But we do not have the hacker community (paid and volunteer) for that. Nowhere near close, given XUL and the other dependencies I mentioned, including the FFOS ones. (Plus, we don’t do “stealth”.)

The truth is that Gecko has been good for us, when we invest in it appropriately. (There’s no free lunch with any engine, given the continuously evolving Web.) We could not have done Firefox OS or the modern Firefox for Android without Gecko. These projects continue to drive Gecko evolution.

And again, don’t forget Servo. The multicore/GPU future is not going to favor either WebKit or Gecko especially. The various companies investing in these engines, including us but of course also Apple, Google, and others, will need to multi-thread as well as process-isolate their engines to scale better on “sea of processors” future hardware.

There’s more to it than threads: due to Amdahl’s Law, both threads and so-called “Data Parallelism”, aka SIMD, are needed at fine grain in all the stages of the engine, not just in image and audio/video decoding. But threads in C++ mean more hard bugs and security exploits than otherwise.
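To make the Amdahl’s Law point concrete, here is a minimal sketch in Python. The function name and the parallel fractions are hypothetical, chosen only for illustration; they are not measurements of Gecko, WebKit, or Servo.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup per Amdahl's Law: 1 / ((1 - p) + p / n)."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # Hypothetical fractions of engine work that can run in parallel.
    for p in (0.5, 0.95):
        for n in (4, 8, 64):
            print(f"p={p:.2f} n={n:>2} speedup<={amdahl_speedup(p, n):.2f}")
```

If only half the work can run in parallel, eight cores yield less than a 1.8x speedup, and no number of cores can exceed 2x. At 95% parallel, eight cores yield roughly 5.9x. That gap is why the serial stages of an engine matter as much as the decoders that are easy to parallelize.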

I learned at SGI, which dived into the deep end of the memory-unsafe SMP kernel pool in the late ’80s, to never say never. Apple and Google can and probably will multi-thread and even SIMD-parallelize more of their code, but it will take them a while, and there will be productivity and safety hits. Servo looks like a good bet to me technically because it is safer by design, as the main implementation language, Rust, focuses on safety as well as concurrency.

Other approaches to massively parallel hardware are conceivable, and the future hardware is not fully designed yet, so I think we should encourage web engine research.

Strategic Imperatives

Beyond these considerations, we perceive a new strategic imperative, both from where we sit and from many of our partners: the world needs a new, cleanish-slate, safer/parallel, open-source web engine project. Not just for multicore devices, but also — for motivating reasons that vary among us and our partners — to avoid depending on an engine that an incredibly well-funded and lock-in-prone competitor dominates, namely WebKit.

Such a safer/parallel engine or engines should then be distributed and feed back on web standards, pushing the standards in more declarative and implicitly parallelizable directions.

Indeed more is at stake than just switching costs, standards progress, and our mission or values.

One pure business angle not mentioned above is that using an engine like WebKit (or what is analogous in context, e.g., Trident wrappers in China) reduces your product to competing on distribution alone. Front end innovations are not generally sticky; they’re in any event easy to clone. Consider Dolphin on Android. Or observe how Maxthon dropped from 20+% market share to below 1% in a year or so in China. Observe also the Qihoo/360 browser, with not much front end innovation, zooming from 0 to 20% in the same time frame.

Deep platform innovations can be sticky, and in our experience, they move the web forward for everyone. JS performance is one example. Extending the web to include device APIs held back for privileged native app stacks is another. So long as these platform innovations are standards-based and standardized, with multiple and open source implementations interoperating, and the user owns vendor-independent assets, then “quality of implementation and innovation” stickiness is not objectionable in my view.

Back to Mozilla. We don’t have the distribution power on desktop of our competitors, yet we are doing well and poised to do even better. This is an amazing testimony to our users and the value to them of our mission and the products we build to uphold it. In addition to Firefox on the desktop, I believe that Firefox OS and Firefox for Android (especially as a web app runtime) will gain significant distribution on mobile over the next few years.

Take Heart

So take heart and persevere. It is sad to lose one of the few remaining independent web platforms, Presto, created by our co-founder of HTML5 and the unencumbered <video> element. I hope that Opera will keep fighting its good fight within WebKit. Opera fans are always welcome in Mozilla’s community, at all levels of contribution (standards, hacking, engagement).

We don’t know the future, but as Sarah Connor carved, “No fate but what we make.” Whatever happens, Mozilla endures.

/be

Other voices: