I took time away from the Mozilla all-hands last week to help out on-stage at the Intel Developer Forum with the introduction of RiverTrail, Intel’s technology demonstrator for Parallel JS — JavaScript utilizing multicore (CPU) and ultimately graphics (GPU) parallel processing power, without shared memory threads (which suck).

Then over the weekend, I spoke at CapitolJS, talking about ES6 and Dart, and demo’ing RiverTrail to the JS faithful. As usual, I’ll narrate my slides, but look out for something new at the end: a screencast showing the RiverTrail IDF demo.

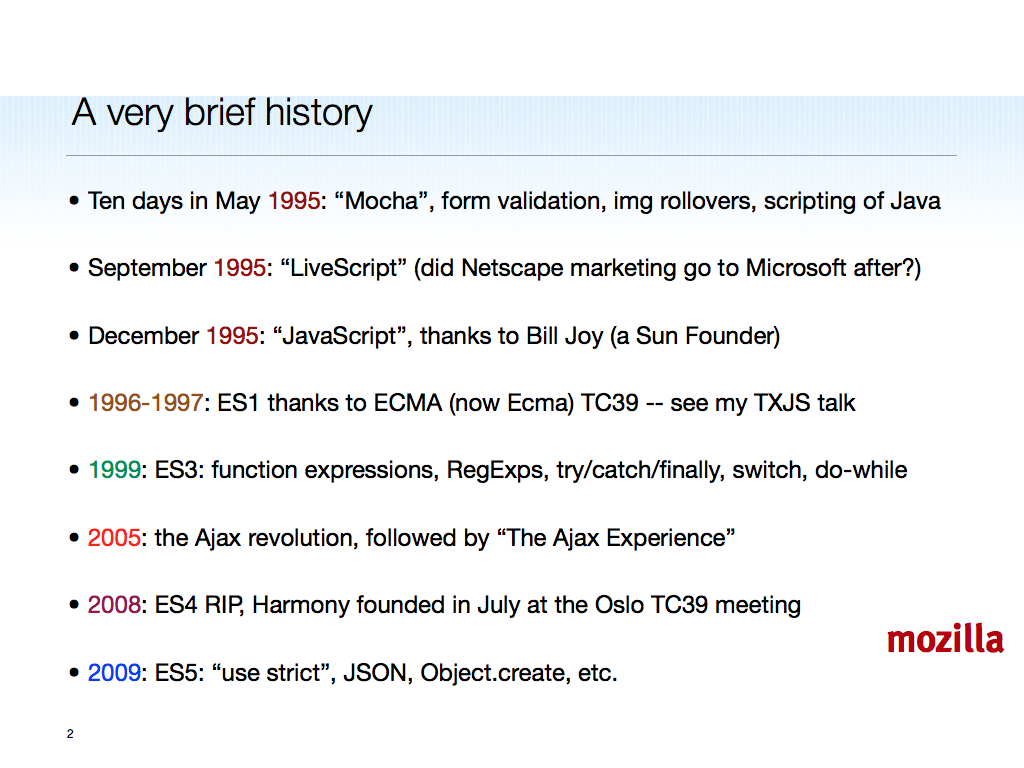

I had a lot to cover in a half-hour (a good talk-time in my view — we’ll see how the video comes out, but I found it invigorating). CapitolJS had a higher-than-JSConf level of newcomers to JS in attendance, so I ran through material that should be familiar to readers of this blog, presented here without much commentary:

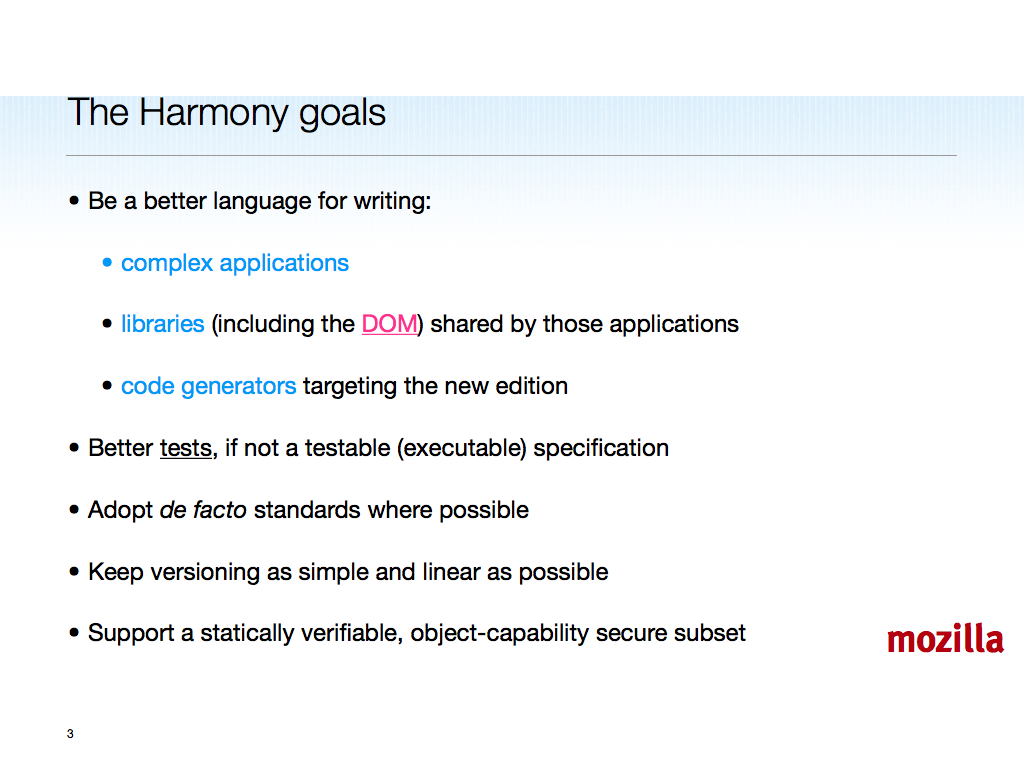

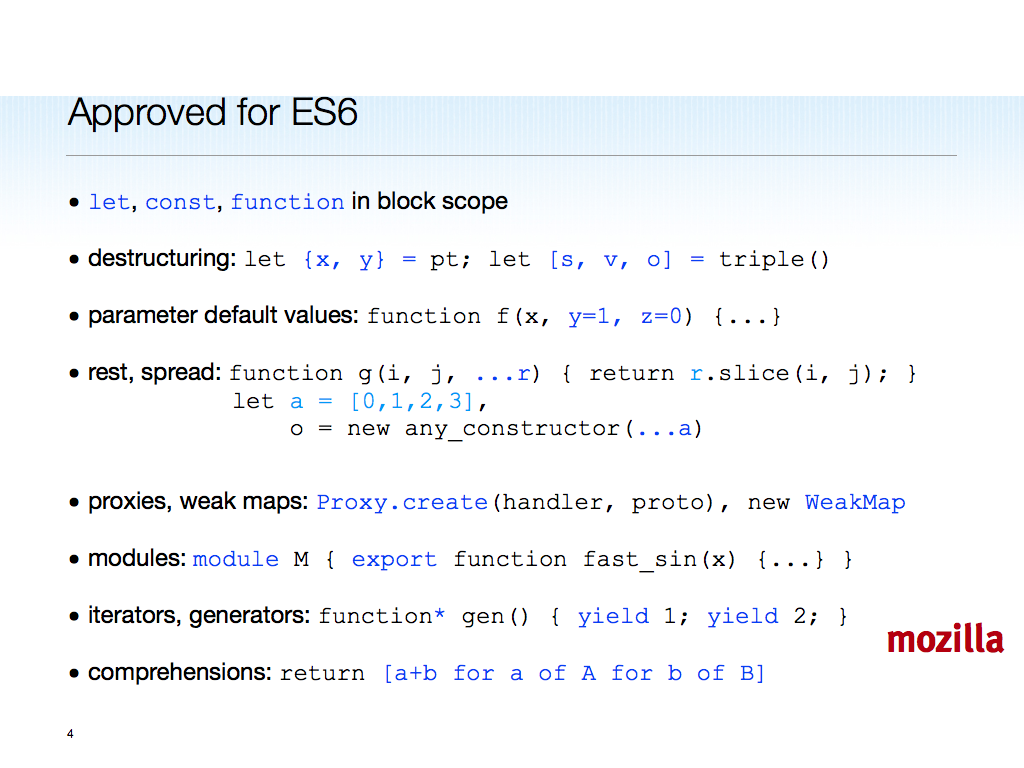

The meaning of my color-coding should be intuitively obvious ;-).

BTW, dom.js, with its Proxy usage under the hood, is going great, with David Flanagan and Donovan Preston among others hacking away on it, and (this is important) providing feedback to WebIDL’s editor, Cameron McCormack.

Here I must add the usual caveat that “ES6” might be renumbered. Were I more prudent, I’d call it “ES.next”, but it’s highly likely to be the 6th edition, and you’re all sophisticated close readers of the spec and its colorful history, right?

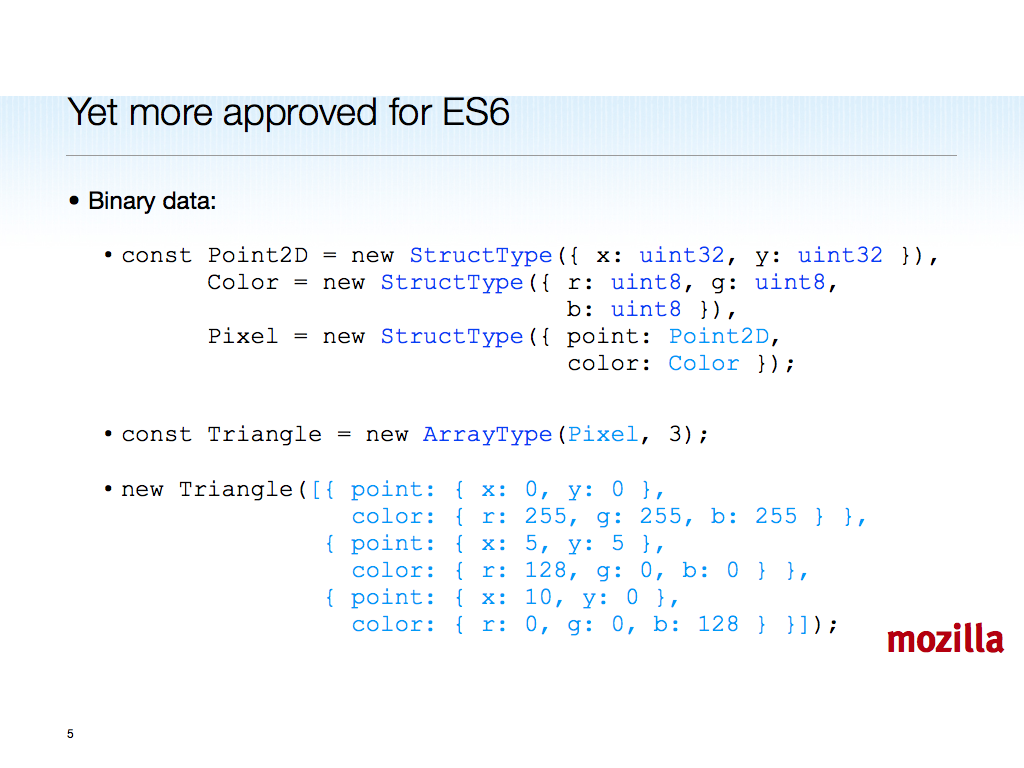

The SpiderMonkey bug tracking binary data prototype implementation is bug 578700.

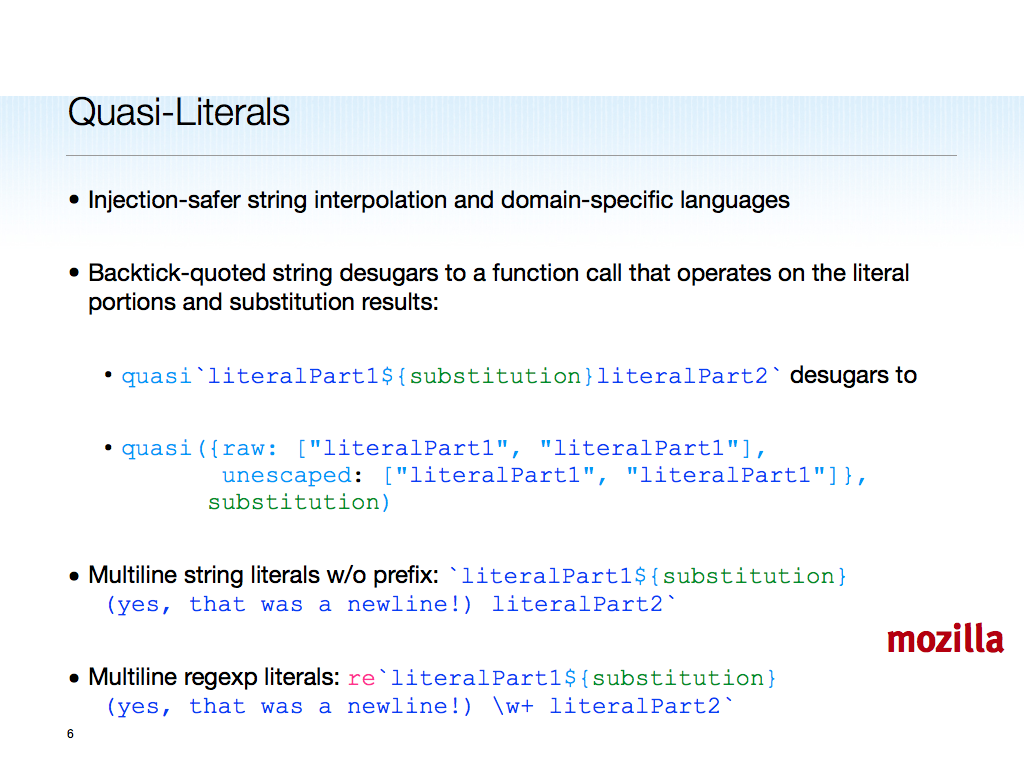

The SpiderMonkey bug tracking quasi-literal prototype implementation is bug 688857.

The @ notation is actually “out” for ES6, per the July meeting (see notes). Thanks to private name objects and object literal extensions (see middle column), private access syntax is factored out of classes. Just use this[x] or p[x], or (in an object literal for the computed property name, which need not be a private name) [x]: value.
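A minimal sketch of the computed-property-name form `[x]: value`, as it later shipped in ES2015. The private name object is approximated here by a `Symbol`, which is an assumption: symbols are unique, non-string keys, but unlike the private names described above they are not truly private, since `Object.getOwnPropertySymbols` can still find them. The names `secret` and `makeCounter` are hypothetical.

```javascript
// A Symbol stands in for a private name object (an approximation:
// symbols are unique but remain discoverable through reflection).
const secret = Symbol("secret");

function makeCounter() {
  return {
    // Computed property name: the key is the value of `secret`.
    [secret]: 0,
    increment() {
      this[secret] += 1; // access via this[x], as in the text
      return this[secret];
    },
  };
}

const c = makeCounter();
c.increment();
c.increment();

console.log(c[secret]);              // 2
console.log(Object.keys(c).join()); // increment (string keys only)
```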

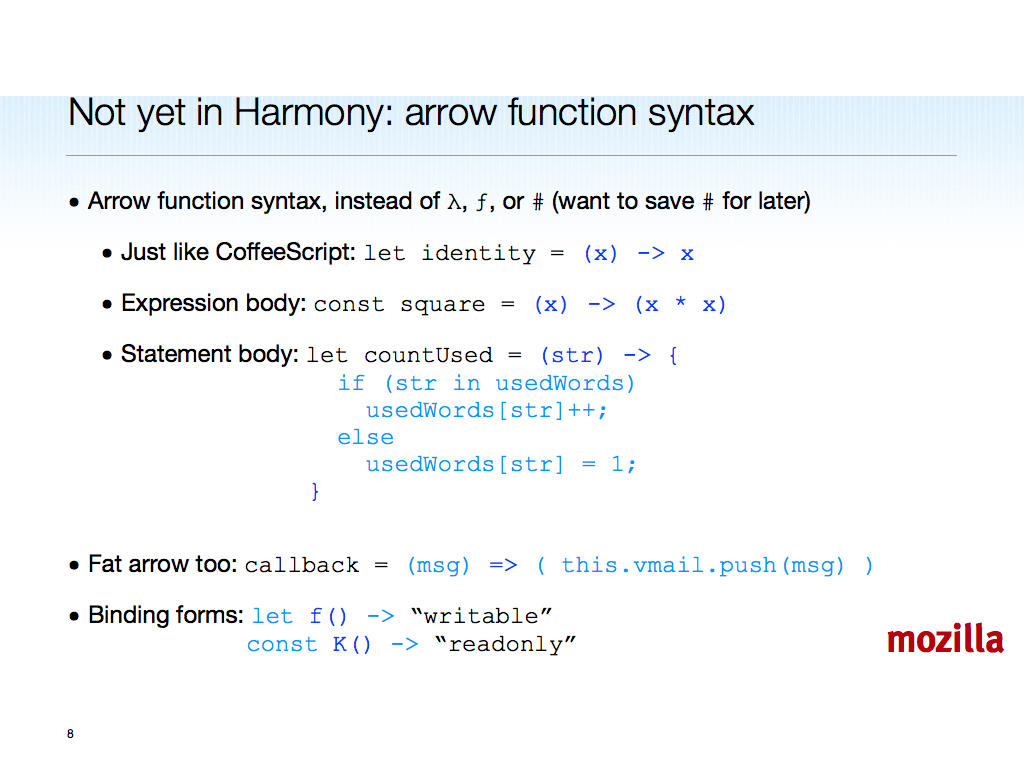

As close to CoffeeScript as I can get them, given JS’s grammar and curly-brace (not indentation-based) block structure.

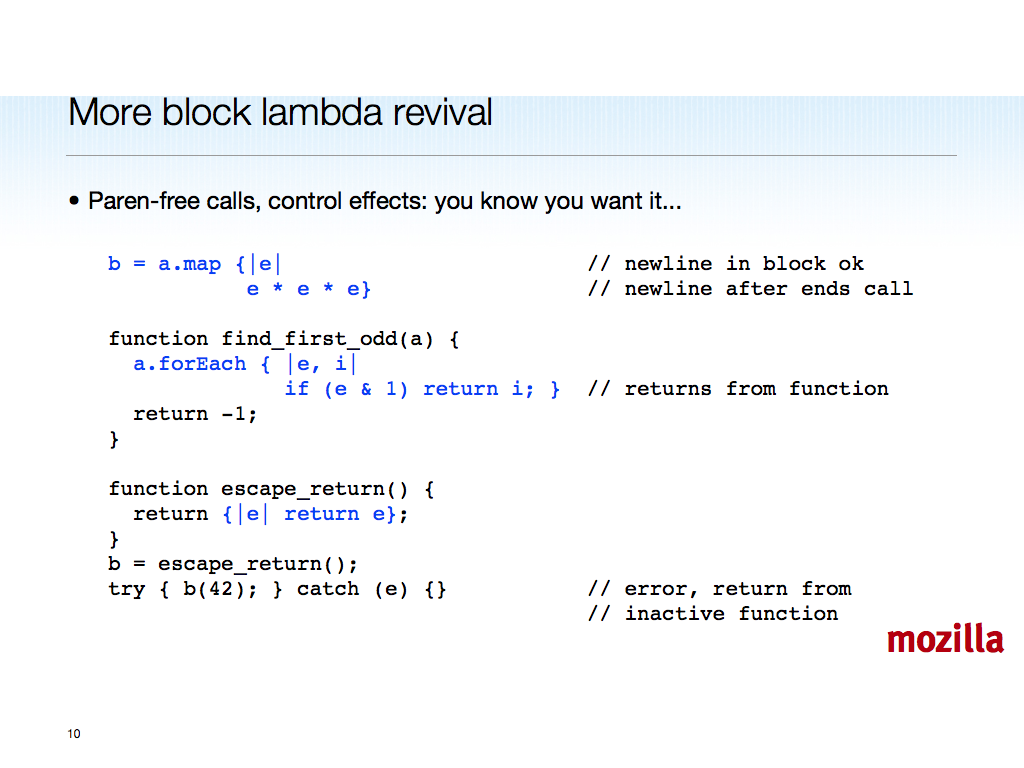

Ruby-esque; Smalltalk is the grandfather.

You know you want it.

I’ve been clear about preferring block-lambdas over arrows for their added semantic value and compelling syntax mimicking control statements. It’s good to hear @jashkenas agree in effect.

@mikeal is right that JS syntax is not a problem for some (perhaps many) users. For others, it’s a hardship. Adding block-lambdas helps that cohort, adds value for code generators (see Harmony Goals above), and on balance improves the language in my view. It won my straw show-of-hands poll at CapitolJS against all of arrows, vaguer alternatives, and doing nothing.

The epic Hacker News thread on my last blog post in relation to Dart needs its own Baedeker. For now I’ll just note that Dart and the politics evident in the memo are not making some of my standards pals who work for other browser vendors happy. Google may fancy itself the new Netscape, but it doesn’t have the market share to pull off proprietary power-move de facto standards.

The leaked memo makes some observations I agree with, some unbacked assertions about unfixable JS problems that TC39 work in progress may falsify this year, and a few implicit arguments that are just silly on their face.

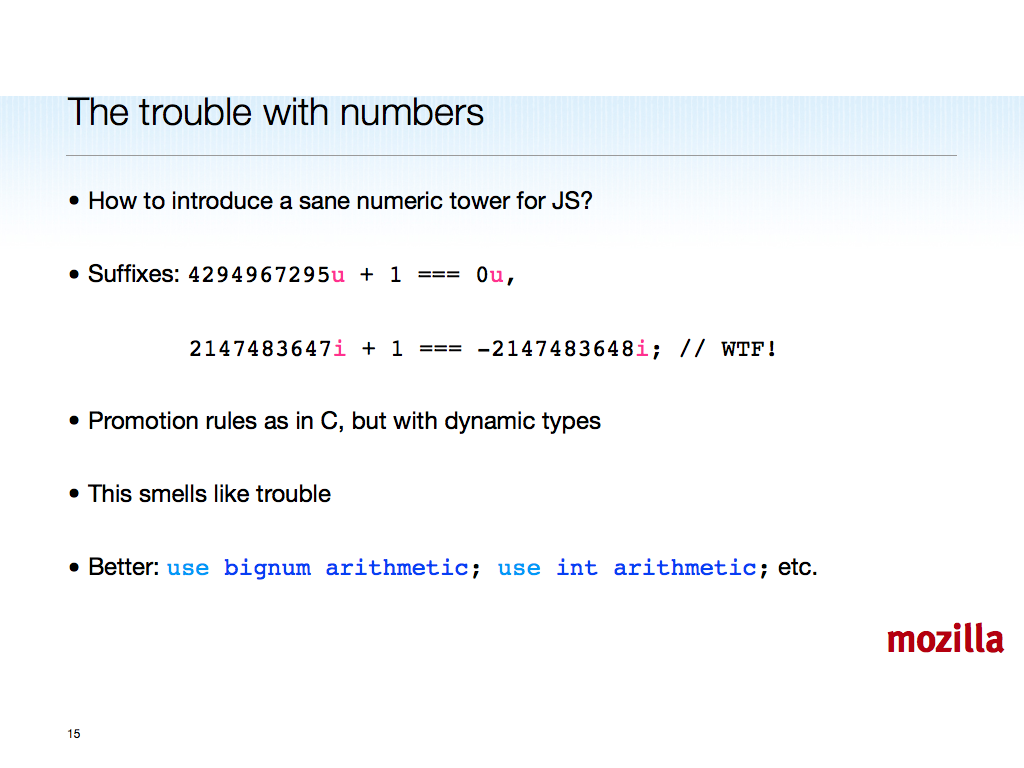

Still, I think we should react to valid complaints about JS, whatever the source. The number type has well-known usability and (in practice, in spite of aggressively optimizing JIT-compiling VMs) performance problems.
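To make the usability complaint concrete: every JS number is an IEEE 754 double, so decimal fractions round, and integers stop being exact past 2^53. A small illustration using only standard built-ins:

```javascript
// Decimal fractions are not exactly representable in binary floating point.
const sum = 0.1 + 0.2;
console.log(sum === 0.3); // false
console.log(sum);         // 0.30000000000000004

// Integers are exact only up to 2^53; beyond that, neighbors collide.
const big = 2 ** 53;
console.log(big + 1 === big); // true

// Number.isSafeInteger reports whether an integer is still exact.
console.log(Number.isSafeInteger(big - 1)); // true
console.log(Number.isSafeInteger(big));     // false
```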

The last bullet shows pragmas for switching default numeric type and arithmetic evaluation regime. This would have to affect Number and Math, but lexically — no dynamic scope. Still a bit hairy, and not yet on the boards for Harmony. But perhaps it ought to be.

Links: [it’s true], [SpiderMonkey Type Inference].

Coordinated strawman prototyping in SpiderMonkey and V8 is a tall order. Perhaps we need a separate jswg.org, as whatwg.org is to the w3c, to run ahead? I’ve been told I should be BDFL of such an org. Would this work? Comments welcome.

Remember, ridiculously parallel processing power is coming, if not already present, on your portable devices. It’s here on your laptops and desktops. The good news is that JS can exploit it without your having to deal with data races and deadlocks.

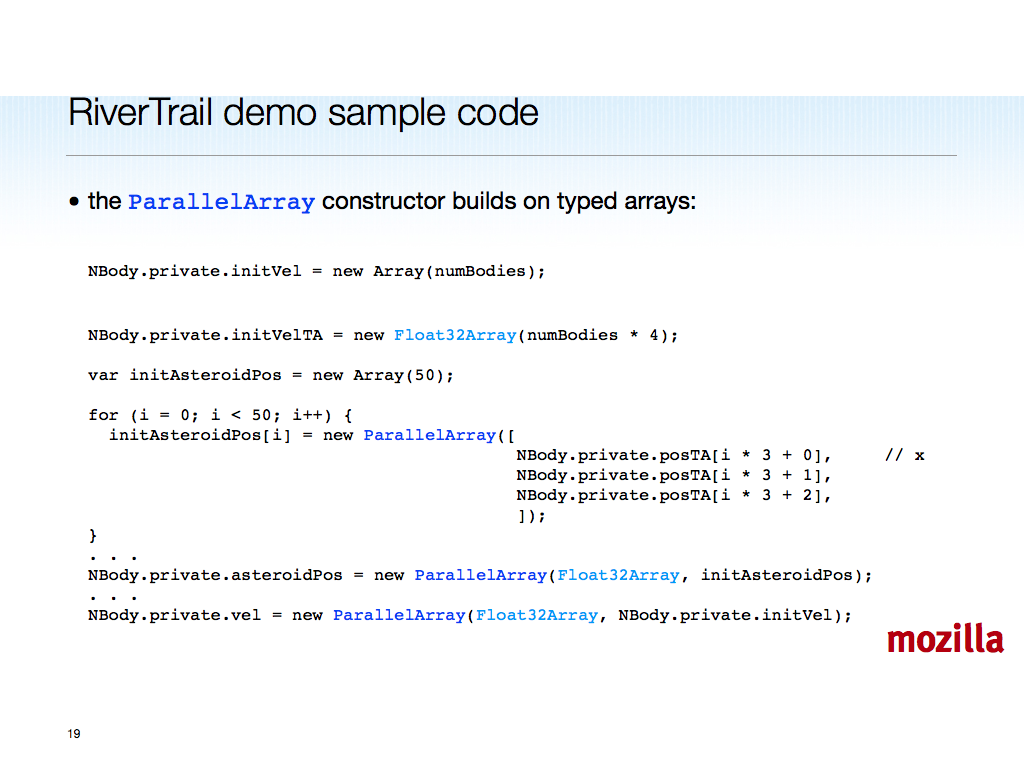

RiverTrail is a Narcissus-based JS to OpenCL compiler, packaged as a Firefox add-on. It demonstrates the utility of a new ParallelArray built-in, based on typed arrays. The JS-to-OpenCL compiler automatically multicore-short-vectorizes your JS for you.

As noted in the previous slide, because the ParallelArray methods compile to parallelized folds (see Guy Steele’s excellent ICFP 2009 keynote), associative operations will be reordered, resulting in small non-deterministic floating-point imprecision errors. This won’t matter for graphics code in general, and it’s an inevitable cost of doing business on parallel floating-point hardware.
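To see why reordering changes floating-point results, compare a left-to-right fold with the balanced tree fold that a parallel reduction performs. This is a sequential sketch of the two evaluation orders, not RiverTrail’s code, and the function names are illustrative. With values near 1e16, where adjacent doubles are 2 apart, adding 1 is lost to rounding in one grouping but survives in the other:

```javascript
// Left-to-right fold: the order a sequential loop uses.
function sequentialSum(xs) {
  let acc = 0;
  for (const x of xs) acc += x;
  return acc;
}

// Balanced tree fold over [lo, hi): the shape of a parallel reduction,
// where each half could be summed on a different core or vector lane.
function treeSum(xs, lo = 0, hi = xs.length) {
  if (hi - lo === 0) return 0;
  if (hi - lo === 1) return xs[lo];
  const mid = (lo + hi) >>> 1;
  return treeSum(xs, lo, mid) + treeSum(xs, mid, hi);
}

const xs = [1e16, 1, -1e16, 1];
console.log(sequentialSum(xs)); // 1
console.log(treeSum(xs));       // 0
```

For integer-valued data within the safe range both orders agree; the discrepancy only appears once rounding kicks in.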

The code looks like typical JS, with no hairy callbacks, workers, or threads. It requires thinking in terms of immutable trees and reductions or other folds, but that is not a huge burden. As Guy’s talk makes plain, learning to program this way is the key to parallel speedups.
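A sequential sketch of that programming model. The `ImmutableArray` class is hypothetical and is not RiverTrail’s actual `ParallelArray` API: it wraps a `Float32Array`, and `map` and `reduce` take pure elemental functions and return new values instead of mutating. Because the callbacks touch no shared mutable state, an engine would be free to split the work across cores; this sketch simply runs a loop.

```javascript
// Hypothetical immutable-by-convention wrapper: `data` is never written
// after construction, so map/reduce callbacks see no shared mutable state.
class ImmutableArray {
  constructor(values) {
    this.data = Float32Array.from(values); // private copy of the input
    Object.freeze(this); // the wrapper's fields cannot be reassigned
  }

  get length() {
    return this.data.length;
  }

  // Applies a pure elemental function; returns a new ImmutableArray.
  map(fn) {
    const out = new Float32Array(this.data.length);
    for (let i = 0; i < out.length; i++) out[i] = fn(this.data[i], i);
    return new ImmutableArray(out);
  }

  // Folds with fn, which should be associative so a parallel engine
  // could regroup it (at the cost of float rounding differences).
  reduce(fn) {
    if (this.data.length === 0) throw new TypeError("reduce of empty array");
    let acc = this.data[0];
    for (let i = 1; i < this.data.length; i++) acc = fn(acc, this.data[i]);
    return acc;
  }

  toArray() {
    return Array.from(this.data);
  }
}

const pixels = new ImmutableArray([1, 2, 3, 4]);
const squared = pixels.map((x) => x * x);
console.log(squared.toArray().join(","));      // 1,4,9,16
console.log(squared.reduce((a, b) => a + b)); // 30
console.log(pixels.toArray().join(","));       // 1,2,3,4 (unchanged)
```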

Here is my screencast of the demo. Alas, since RiverTrail currently targets the CPU and its short vector unit (SSE4), and my screencast software uses the same parallel hardware, the frame rate is not what it should be. But not to worry, we’re working on GPU targeting too.

At CapitolJS and without ScreenFlow running, I saw frame rates above 35 fps for the Parallel demo, compared to 3 or 2 fps for Sequential.

The point of a technology demonstrator is to show where real JS engines can go. Automatic parallelization of ParallelArray-based code can be done by next year’s JS engines, based on this year’s Firefox add-on. We’re very close to exploiting massively parallel hardware from JS, without having to write WebCL and risk terrible safety and DoS bugs.

To close, I sought inspiration from Wesley Snipes in Passenger 57. Ok, not his best movie, but I miss the ’90s action movie era.

Seriously, the shortest path on the Web usually is the winning play. JS is demonstrably able to grow new capabilities with less effort than a “replacement” entails. Always bet on JS!

/be

How many in the audience assented to “You know you want [Block-level lambdas]”? How many were cowed into nodding by the BDFL aura? 🙂

Not to exhume a 4-year-old horse, but how does RiverTrail relate to your promises back in “Threads suck”, to wit:

So here’s a promise about threads, one that I will keep or else buy someone a trip to New Zealand and Australia (or should I say, a trip here for those over there):

JS3 will be ready for the multicore desktop workload.

…

Most high-level concurrent Mozilla programming will be in JS3 on the back of an evolved Tamarin.

“We’re very close to exploiting massively parallel hardware from JS, without having to write WebCL and risk terrible safety and DoS bugs.”

This makes it sound like you’d rather not have WebCL in the browser at all. I hope you will reconsider this perspective.

OpenCL is a phenomenal language: fast, clear, unsurprising, open, and universally supported. If you are worried about security, then I submit that OpenCL running in a sandbox is still going to be faster in practice than anything that can be done in a general-purpose high-level language.

I hope you will also consider that WebCL may be crucial as our best defense against NaCl, Dash, and other efforts that would damage the open-source single-platform web with fragmentation and vendor lock-in in the name of speed.

I think it would definitely be good to have an equivalent of the WHATWG for ECMA. This would allow new features to be frequently added with less sacrifice in consistency across implementors.

@skierpage: I always do the function syntax straw poll — it’s non-binding, relax. Doing nothing is not the winner, though. So what is your preference?

My prognostications about Tamarin were already looking shaky in 2007, as Adobe had the top hackers working on a secret Tamarin-Tracing fork that didn’t pan out. This left Tamarin not well-maintained or evolving in the open. Meanwhile, SpiderMonkey and JSC had to speed up on dynamic JS, and by 2008 were faster on untyped code than Tamarin, in spite of the latter’s JIT.

That, plus ES4 failing and Adobe pulling back from Ecma TC39, meant no JS3 on Tamarin.

BTW, at this point, presuming Harmony continues, there’s no gain in keeping JS’s version number line different from ES’s. So I am thinking we should jump to “6” for JS.next to match ES.next (assuming 6 holds).

Anyway, ParallelArray is not exactly what I had in mind in projecting a bright non-threaded JS multicore future all those years ago. I was thinking that between immutability, move semantics (hand-off), actor-like (lighter than workers) concurrency, and stuff that was trendy at the time (STM), JS would utilize parallel hardware without exposing shared-memory threads. I still believe this, modulo STM not scaling or composing well.

@Ben: I didn’t say WebCL should be rejected. I view it as inevitable, like WebGL, and a good low-level language. But it’s not ever going to be as productive to code in for the greater group of JS hackers who might use ParallelArrays or other high-level approaches yet to be designed.

Just as raw WebGL is painful and high-level scene graph libraries are emerging, so too WebCL is painful in the same way C is (OpenCL is a mutated C99 subset). I’m an old C masochist, but even I can’t recommend WebCL as the *only* way.

We do need GPUs to grow up and, in spite of the massively greater state sizes, support OS context switching and preemption, to address the DoS problem. And I’m not sure how WebCL’s out of bounds addressing capabilities can be sandboxed or verified efficiently. This is a problem with closer CPU/GPU integration and more shared virtual memory.

@Jason: would the jswg.org have to produce a JS’ to JS compiler, or even patches for the three open source engines? I’d need to organize some savvy, socially adept hackers to do the latter.

/be

Are we at a stage where we can do linear algebra and numerical analysis in JavaScript? Can you talk more about the future of JS in scientific and engineering applications in the browser?

Is a JSWG really needed? For the mainstream, ECMAScript.next (plus possibly block lambdas and type guards) fixes most deficiencies. I don’t think we need to go faster than the current TC39 speed. Don’t forget that the rest of the world is just now catching up with ECMAScript 5 (example: unbelievably, there are people who are scared of strict mode!). By the time ES.next is finished, ES5 will be widely understood and adopted, so that works fine. Going slow, but not too slow, does have its advantages! Or would a JSWG just push ES.next?

If it’s not about pushing ES.next, then there are two areas where I think that hacker energy would be spent better:

– Desktop JavaScript: desktop APIs + tool that turns a webapp into a first-class desktop app. Right now, my favorite would be to package Node.js code with an HTML5 engine into a desk app, as that would allow one to deploy either on a server or a desktop.

– IDE: Write an IDE that is really tailored for JavaScript’s needs, instead of copying what’s done in the Java world. Prove to the world that ES.next has all the “toolability” that one needs. IMHO that would include deciding (at least initially) on a single documentation standard (e.g. JSDoc) and a single way of unit testing.

Numeric tower: I don’t mind number literal suffixes (Java has them). I would find the pragmas far more confusing.

If there is a greater variety of numeric types – is it really about literals? Or is it mainly about computing with values? Would it be a problem if these additional numeric values looked like objects? For example:

var a = new BigNum(344);

var b = BigNum.parse(inputString);

var c = a.times(b);

But I have no idea what causes performance problems. Is it because one uses floats for int operations?
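The method-call style above can be sketched on top of `BigInt`, which JavaScript gained well after this discussion. `BigNum`, `parse`, and `times` are the commenter’s hypothetical names, not a real library, and this wrapper is only an assumption about how they might behave. It also shows the cost raised in the reply below: without operator support, every arithmetic step becomes a method call.

```javascript
// Hypothetical BigNum wrapper over the built-in BigInt type.
class BigNum {
  constructor(value) {
    // BigInt() accepts integer numbers, integer strings, or bigints,
    // and throws on fractional input.
    this.value = BigInt(value);
  }
  static parse(text) {
    return new BigNum(text.trim());
  }
  times(other) {
    return new BigNum(this.value * other.value);
  }
  toString() {
    return this.value.toString();
  }
}

const a = new BigNum(344);
const b = BigNum.parse("123456789012345678901234567890");
const c = a.times(b);
console.log(c.toString()); // exact product, no rounding to double
```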

@Axel

“Desktop JavaScript: desktop APIs + tool that turns a webapp into a first-class desktop app. Right now, my favorite would be to package Node.js code with an HTML5 engine into a desk app, as that would allow one to deploy either on a server or a desktop.”

Yes! I’ve been preaching about that for a couple of months now. The Mozilla Prism project should be brought back from the dead with that mentality.

@MySchizoBuddy

I study physics and I have done some simple numerical approximations in JavaScript in the browser with dynamic plots without much trouble. I will probably be doing more complex stuff next year and I’m looking at Workers for splitting long calculations into chunks. I believe we’ll be getting there by next year and getting decent performance in command-line environments like NodeJS. In the end I think C will remain the go-to language for doing the actual math, but JS will probably be a great language for analyzing the results.

@Axel: “(plus possibly block lambdas and type guards)” is a big stretch. To get either into ES.anything will require prototyping and user-testing ahead of the agreed-upon proposals, which will soak up all the near-term energy in SpiderMonkey and V8 as far as I can see (without more hackers).

The jswg.org idea may need to go beyond whatwg.org, and produce patches or even “mods” to existing deployed engines, not just specs.

I see many IDEs under way and in use. Still I agree jswg.org should consider tooling throughout, and collaborate on analyses and prototypes that connect tools, users, and the evolving core JS language design.

On adding number types: yes, operator syntax is needed (see, if you haven’t, https://jroller.com/cpurdy/entry/the_seven_habits_of_highly1). Beyond that, you are not addressing the issue of promotion from narrower to wider types, and signed vs. unsigned, that must be done implicitly or (this could hurt!) explicitly. What are the evaluation semantics of mixing int32, uint32, int64, uint64, double, bignum, etc.?

The advantage of the “use xxx arithmetic” pragma is that it changes the “number” typeof-type consistently from double to another type (xxx). No mixing, just better (or faster yet worse, e.g. int32) semantics.
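What a hypothetical “use int32 arithmetic” pragma would mean can be approximated in today’s JS, which has no such pragma: `x | 0` converts a double to int32, and `Math.imul` performs 32-bit integer multiplication, so overflow wraps instead of growing. The helper names `add32` and `mul32` are illustrative.

```javascript
// Emulated int32 operations for int32 inputs: results wrap modulo 2^32
// into the range [-2^31, 2^31).
const add32 = (a, b) => (a + b) | 0;
const mul32 = (a, b) => Math.imul(a, b);

const max = 2147483647; // 2^31 - 1

console.log(max + 1);             // 2147483648 (double arithmetic)
console.log(add32(max, 1));       // -2147483648 (int32 wraps)
console.log(65536 * 65536);       // 4294967296
console.log(mul32(65536, 65536)); // 0
console.log(add32(7.9, 0));       // 7 (fraction truncated)
```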

@MySchizoBuddy: people are doing numerically intensive code in JS, e.g. https://sylvester.jcoglan.com/ and https://www.numericjs.com/ — plus, consider all the 2D and 3D game development using JS.

Challenges obviously include not just double as the sole number type, but typed arrays requiring heap allocation, taxing both the GC and the programmer (no value semantics, no easy stack or other “interior” allocation). See https://wiki.ecmascript.org/doku.php?id=strawman:value_proxies.

/be

@Brendan: Thank you for responding. I was thinking that implementors would each add extensions to ES on their own, see if authors liked them, and, if so, go to the jswg to get them standardized. The jswg standards could then be implemented by other implementors and/or be incorporated into ES7.

@Ben: btw, WebCL Mozilla implementation under way:

https://hg.mozilla.org/projects/webcl/

@Jason: uncoordinated competing vendor extensions tend to make a mess compared to the situation where vendors can agree on goals, anti-goals, design philosophy, and ways (including socializing!) to work better together. I think that wins, the overhead is not high and it builds trust and coordinates effort that no one company or group can bring to bear.

Note that this is not design-by-committee — the proposals come from one or two people, and they act as champions who own the design details. But the larger group of vendors and other interested parties can see everything (transparency), review, critique, enthuse, and suggest improvements.

The whatwg process also tries to make coordinated sub-specs and win vendor buy-in earlier rather than later. This doesn’t always work out. Nothing does. Letting slip the browser vendor dogs of war looks strictly worse.

What jswg.org could add would be a new community going beyond what TC39 can agree to for a given gulp, trying to elevate or rescue strawmen who missed the last cut-off, and (in case this matters) the BDFL deciding something to cut off endless debate (usually but not always bikeshedding). Also, jswg.org would take all well-behaved individuals as members in good standing, no pay to play.

/be

“What jswg.org could add would be a new community going beyond what TC39 can agree to for a given gulp, trying to elevate or rescue strawmen who missed the last cut-off, and (in case this matters) the BDFL deciding something to cut off endless debate (usually but not always bikeshedding). Also, jswg.org would take all well-behaved individuals as members in good standing, no pay to play.”

=> To some extent (though not entirely), your description of jswg.org reminds me a lot of commonjs.

A natural path that the WHATWG took was to make HTML a living spec.

Meanwhile, CSS3 has been split into modules.

DOM4 (DOM core) is the only DOM part I know with a “4” as version number.

ECMAScript remains the last “one-block versioned” spec. The way you see it, would the role of the jswg be to turn JS into a living spec?

Also, the champion model that TC-39 has adopted looks very much like a module system. Parts could be adopted independently. I’m not sure object literal extensions, tail calls, shorter function syntax, proxies or quasis are at the same level of maturity now. Will they all be ready for ES6? Regardless, as usual, they are going to be implemented and adopted independently (except for syntax, for which I can’t see another way than adopting it all at once).

@David: language design involves balancing global accounts, enforcing non-modular properties (cross-cutting concerns including security properties, usable and consistent syntax). Champions rely on the modularity of overt “features”, but without a group of experts interacting with the champions, it’s easy to go wrong. IMHO we saw this with scoped object extensions (which bounced off both TC39 and, I’m told, V8 maintainers) and deferred functions.

/be

@Brendan: “To get either into ES.anything will require …” Ah! Didn’t know it would tax existing resources that much. Then I’m all for a JSWG with a good philosopher king.

“I see many IDEs under way and in use. Still I agree jswg.org should consider tooling throughout …” True, there are many IDEs, but most of them are just clones of Eclipse. Maybe the role of a JSWG should be to spread the dynamic (as in “not edit-compile-debug”) gospel. That is, it would ensure that the lessons learned from Common Lisp, Dylan, Smalltalk, Self, Racket, Newspeak, Erlang etc. are not forgotten. Possibilities: Writing vision papers (sketching aspects of a “perfect JS IDE”), curating a reading list of papers on IDE research, documenting best JS practices, writing small prototypes.

Numbers: I don’t feel competent enough to suggest anything in this domain, but I’ll still do it:

– double and int (int32?) should be part of the core language and should take care of most mainstream uses.

– With stuff such as bignums, it has been my impression that computation mostly stays within a given type and only literals are “imported” every now and then. That would mean that explicit conversion would be OK. On the other hand, using a small int for a loop counter where the loop body deals with bignums seems a common scenario. That is, mixing int and float sizes should be supported. And I’m not sure that can be done with a per-file pragma.

Tangent: Operator-wise, it would be cool to have Common-Lisp-style generic functions (=multiple dispatch) whose names can optionally be written infix. Maybe in ECMAScript 12? 😉

@Axel: pragmas are per-block (including implicit top-level block).

Adding some new types with implicit conversions, others without, smells like trouble. If we get a decimal type (as a library using value proxies?), it will want implicit conversion from int to decimal. int64 is hot too.

Also, uint32 and uint64 would be wanted along with signed forms. Finally, GPUs want float32 (if not float16). Hence the post-ES6 agenda item to work on value types or proxies, with operator and literal support.

We had multi-methods for ES4, but my sense is that no one on TC39 wants to add another dispatch mechanism. Maybe ES12 😉 but do not hold your breath.

/be

Brendan,

Correct me if I’m wrong, but can’t we already write parallel programs in JS with web workers and Node.js?

Assuming you know the number of cores available (and, especially in SSJS/Node, this is more predictable), what we’re getting with RiverTrail is already more or less possible. Sure, the benchmarks may not be as fancy, but the core functionality is already there.

The only problems I foresee are:

1) Syntactical shortcomings – e.g. absence of actors

2) The DOM does not expose the number of cores available. Consider adding some read-only system information to Gecko DOM – e.g. CPU clock speed, RAM, available RAM, CPU usage, etc?

I have to agree with @Axel, that a per-file pragma and no ability to, for example, use int32 values to compute colors and doubles to compute positions in the same file would be a harsh limitation.

How would this affect interaction with native interfaces (e.g. the DOM or node’s api)? I assume that many native methods and properties would require type guards (or else type conversions) and if canvas.width is an int32 does that force me to “use int32 arithmetic;” for any file that needs to resize a canvas?

@Ranger: workers as currently implemented do not scale or compose well: you have to do structured cloning, which means in practice that you have to copy or serialize.

Actors are great, they need hand-off (move semantics) and not just immutability (see Rust; also recent Erlang benchmarking results).
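The difference between copying and hand-off is visible with `structuredClone`, which uses the same structured clone algorithm as `postMessage`. This assumes an engine that exposes a global `structuredClone` (current browsers, Node.js 17 and later), which postdates this thread. Listing an `ArrayBuffer` in the `transfer` option moves it: the receiver gets the memory and the sender’s buffer is detached, so the two sides can never race on it.

```javascript
// Copy: the clone gets its own bytes; the original stays usable.
const original = new Uint8Array([1, 2, 3, 4]).buffer;
const copy = structuredClone(original);
console.log(original.byteLength, copy.byteLength); // 4 4

// Hand-off: listing the buffer in `transfer` moves ownership.
const sent = new Uint8Array([5, 6, 7, 8]).buffer;
const received = structuredClone(sent, { transfer: [sent] });
console.log(sent.byteLength);              // 0 (detached: sender lost access)
console.log(received.byteLength);          // 4
console.log(new Uint8Array(received)[0]); // 5
```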

Exploiting SIMD data parallelism, with CPUs and GPUs, does not require high-level worker or actor notions as such. Yes, you could compile such things to the GPU, but it is strictly more work, requiring mapping closures (JS functions in general) and mutable data to the GPU and partitioning mutable memory somehow.

ParallelArrays, being immutable, avoid this barrier to implementation and adoption. The shorter path usually wins.

@Prestaul: pragmas are block-scoped, not per-file.

Anyway, I was not suggesting that pragmas are the *only* way to add new numeric types, but they do (as sketched here) avoid the mixing and promotion-rule perplex.

Computing int32 vs. double has two aspects. Storage, which binary data addresses; and arithmetic evaluation rules, where we either have implicit conversion rules, or we require explicit conversions. Which do you want?
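The storage half of that question can already be illustrated with typed arrays: each element type fixes how a stored double is converted. Values read back out are ordinary doubles again, so the arithmetic half remains open. A short illustration using only standard typed-array types:

```javascript
// Each typed array applies its own conversion on store.
const i32 = new Int32Array(1);
i32[0] = 2147483648;   // 2^31 does not fit in int32
console.log(i32[0]);   // -2147483648 (wraps modulo 2^32)

const u8 = new Uint8Array(1);
u8[0] = 300;
console.log(u8[0]);    // 44 (300 mod 256)

const u8c = new Uint8ClampedArray(1);
u8c[0] = 300;
console.log(u8c[0]);   // 255 (clamped)

const f32 = new Float32Array(1);
f32[0] = 0.1;
console.log(f32[0] === 0.1);              // false: rounded to float32
console.log(f32[0] === Math.fround(0.1)); // true

// Loaded values are doubles, so arithmetic on them is double arithmetic.
console.log(i32[0] - 1); // -2147483649 (no int32 wrap)
```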

/be

@axel Multimethods (except for infix syntax) could be added to JS with a library. I would be interested in seeing a library for doing multiple dispatch. If nobody else does it, I’ll probably do it someday.

One way to do it would be to dispatch based on the value of the “constructor” attribute of the arguments (or typeof for basic types). For a language as dynamic as javascript, predicate dispatch would be nice and also easy, although it would be extremely slow.
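A minimal sketch of the library approach described above: dispatch on `typeof` for primitives and on the constructor’s name for objects. The names `typeTag`, `multimethod`, and `define` are hypothetical, and exact-type matching is a simplifying assumption (no inheritance-aware or predicate dispatch).

```javascript
// Returns a type tag: typeof for primitives, constructor name for objects.
function typeTag(value) {
  if (value === null) return "null";
  const t = typeof value;
  if (t !== "object" && t !== "function") return t;
  return value.constructor ? value.constructor.name : "Object";
}

// Creates a function that dispatches on the type tags of all its arguments.
function multimethod(name) {
  const table = new Map();
  function dispatch(...args) {
    const key = args.map(typeTag).join(",");
    const impl = table.get(key);
    if (!impl) throw new TypeError(`${name}: no method for (${key})`);
    return impl(...args);
  }
  // Registers an implementation for an exact tuple of type tags.
  dispatch.define = (tags, impl) => {
    table.set(tags.join(","), impl);
    return dispatch;
  };
  return dispatch;
}

class Vec {
  constructor(x, y) {
    this.x = x;
    this.y = y;
  }
}

const plus = multimethod("plus")
  .define(["number", "number"], (a, b) => a + b)
  .define(["Vec", "Vec"], (a, b) => new Vec(a.x + b.x, a.y + b.y))
  .define(["number", "Vec"], (k, v) => new Vec(k + v.x, k + v.y));

console.log(plus(1, 2)); // 3
const v = plus(new Vec(1, 2), new Vec(3, 4));
console.log(v.x, v.y);   // 4 6
```

A lookup keyed on the full argument-type tuple keeps each call to one `Map` access; predicate dispatch would instead have to test candidates in order, which is where the slowness mentioned above comes from.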

@Brendan, wouldn’t block scoped pragmas still leave us with many of the issues we might have if they were file scoped? Granted, pragmas simplify implementation for vendors, but the limitations imposed would be too severe for many to overlook. If I have an object wrapping a Float64Array would I not be able to write a method that takes an integer index and a double value?

I would love to have implicit conversion rules in place with explicit conversions used when the developer needs more control. As in, int + double => double unless I cast my double to an int first. Such promotion rules are usually clear and shouldn’t surprise anyone familiar with the current paradigm in which string + number => string. When you talk about type mixing and promotion, is the concern over performance, syntax, or developer expectations?
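The promotion rule proposed above (int + double yields double, with an explicit cast to stay in int) can be sketched with tagged values. Everything here is hypothetical: JS has no int type, so `int32` values are emulated with `| 0`, and the names `makeInt32`, `makeDouble`, `toInt32`, and `add` are illustrative.

```javascript
// Hypothetical tagged numbers; wider types have a higher rank.
const RANK = { int32: 0, double: 1 };

const makeInt32 = (v) => ({ type: "int32", value: v | 0 });
const makeDouble = (v) => ({ type: "double", value: +v });

// Explicit cast: the developer opts out of promotion.
const toInt32 = (n) => makeInt32(n.value);

// Implicit promotion: the result takes the wider operand's type.
function add(a, b) {
  const wider = RANK[a.type] >= RANK[b.type] ? a.type : b.type;
  const sum = a.value + b.value;
  return wider === "int32" ? makeInt32(sum) : makeDouble(sum);
}

const r1 = add(makeInt32(2), makeDouble(0.5));
const r2 = add(makeInt32(2), toInt32(makeDouble(0.5)));
const r3 = add(makeInt32(2147483647), makeInt32(1));
console.log(r1.type, r1.value); // double 2.5
console.log(r2.type, r2.value); // int32 2
console.log(r3.type, r3.value); // int32 -2147483648
```

Even this two-type lattice has to answer the overflow question (the `r3` case wraps); adding unsigned, 64-bit, and bignum types multiplies such decisions.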

@Ranger “Parallel programs” is not a unique use case.

Sometimes, you want to apply the same program to a huge amount of data (SIMD). Hardware-wise, GPUs are great for this; multi-core CPUs, not so much.

Sometimes you want different “computation units” to run different things.

GPUs are very inefficient for these; CPUs are very good.

Sometimes you may even want something else.

Programming languages and frameworks/libraries follow the same pattern. Workers are really good for running different code, but are not really suitable for heavy parallel computing (they lack synchronisation, they don’t share data).

As far as I know, node.js does not allow parallel programming unless you create several instances (or with a C/C++ plugin).

JSWG could be a truly awesome addition to the web/JS development community. It seems to me that community input has until now largely been taken up by the informal process of adoption and development of idioms, libraries, &c. — which was well-suited to a younger web, but does not serve a world with as many complex, performance-intensive and security-sensitive applications.

This is intriguing, but I don’t really get the opposition to threads (with shared memory – which suck, apparently). On GPU it is especially necessary because thread IDs and shared memory provide a mechanism for implementing efficient scan. If you can decompose a sequential algorithm into map and scan-like operations (reduce, split, etc), you can easily parallelize the algorithm, because the parallel upsweep-reduce-downsweep scan runs efficiently on many parallel cores/lanes with shared memory. You really can’t solve sequential problems without this pattern.
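For readers unfamiliar with the pattern, here is the work-efficient (Blelloch) up-sweep/down-sweep exclusive scan, simulated sequentially. The iterations of each inner loop are independent of one another, which is what a GPU would run in parallel across lanes sharing memory. The function name is illustrative, and the sketch assumes the input length is a power of two.

```javascript
// Exclusive prefix sum via up-sweep (reduce) and down-sweep phases.
// Returns a new array; the input length must be a power of two.
function blellochScan(input) {
  const n = input.length;
  if (n === 0 || (n & (n - 1)) !== 0) {
    throw new RangeError("length must be a power of two");
  }
  const a = input.slice();

  // Up-sweep: build partial sums in a balanced binary tree.
  for (let stride = 1; stride < n; stride *= 2) {
    for (let i = 2 * stride - 1; i < n; i += 2 * stride) {
      a[i] += a[i - stride];
    }
  }

  // Down-sweep: clear the root, then push prefix sums back down the tree.
  a[n - 1] = 0;
  for (let stride = n / 2; stride >= 1; stride /= 2) {
    for (let i = 2 * stride - 1; i < n; i += 2 * stride) {
      const left = a[i - stride];
      a[i - stride] = a[i];
      a[i] += left;
    }
  }
  return a;
}

console.log(blellochScan([3, 1, 7, 0, 4, 1, 6, 3]).join(","));
// 0,3,4,11,11,15,16,22
```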

I could see pre-defined data-parallel primitives included that would increase the flexibility of this greatly. A purely functional approach just isn’t all that flexible (no matter what the functional nerds claim).

sean

moderngpu.com

Could number types be per module? Suppose that some module system gets implemented, and something like this is possible:

```javascript
const Int = require("integer");
const Bignum = require("bignum");

let x = Int(20);
let y = Int(30);
let z = x + y; // 50

let a = 20;
let b = x + a; // convert non-explicit to explicit type via static analysis?

let c = Bignum(55);
let d = x + a + c; // promote to bignum?

let e = Number(22); // the others look like this.
```

It’s all bikeshedding, of course. Getting other number types into JS is not an easy problem to solve. The JS engine in Apple’s Quartz Composer solved it by adding type annotations in function parameter notation, i.e.:

```
__structure function (__int x, __string y) {
  return [x, y];
}
```

That is, these notations are only allowed for function return values and parameters, and cannot be arbitrarily declared in the body of the function. It seems rather elegant actually, as it enables the JS function to interface neatly with other software environments (that is, OpenGL/GLSL kernels), keeping type casting/conversion strictly at function boundaries.

@SeanBaxter

Threads are extremely flexible and efficient. But they aren’t suitable for mortal minds. That’s the problem. We need safe abstractions, and no, the abstractions won’t be as flexible as threads. But we need to prioritise safety over flexibility. People’s lives are literally at stake. Race conditions can kill.

For example, say you have a 10,000×10,000 dense matrix and you just want to square each individual element. One way is to launch 10,000 instances of Node.js and run them on Amazon compute servers, where each Node.js instance is in charge of squaring the 10,000 elements of one row.

Is there any library available that will do this: slice the data, start the Node.js instances, and ship the work to Amazon for computation?

Sorry: Node.js will be running on Amazon cloud compute nodes, not on the local computer.
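The slicing step itself is straightforward. A minimal sketch, assuming a row-major `Float64Array` and hypothetical `partitionRows` and `squareRows` helpers that give each worker (a Node.js process, a Web Worker, or a cloud instance) a contiguous block of rows; shipping chunks to remote machines is out of scope here. Since squaring elements is embarrassingly parallel, each range can be processed independently.

```javascript
// Splits [0, rows) into `workers` contiguous [start, end) ranges whose
// sizes differ by at most one row.
function partitionRows(rows, workers) {
  const base = Math.floor(rows / workers);
  const extra = rows % workers;
  const ranges = [];
  let start = 0;
  for (let w = 0; w < workers; w++) {
    const size = base + (w < extra ? 1 : 0);
    ranges.push([start, start + size]);
    start += size;
  }
  return ranges;
}

// Squares every element in rows [start, end) of a row-major matrix in place.
function squareRows(matrix, cols, start, end) {
  for (let i = start * cols; i < end * cols; i++) {
    matrix[i] *= matrix[i];
  }
}

// Small demo: a 4x3 matrix holding 0..11, split across 3 workers.
const cols = 3;
const m = Float64Array.from({ length: 12 }, (_, i) => i);
for (const [start, end] of partitionRows(4, 3)) {
  squareRows(m, cols, start, end);
}
console.log(JSON.stringify(partitionRows(10, 3))); // [[0,4],[4,7],[7,10]]
console.log(m[11]);                                // 121
```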

So ‘this’ keyword inside nested functions will be still broken in ES6? Will we have to still use ugly hacks such as assigning ‘this’ to another variable, using bind() or even fat arrows?

@Jarek, I think you might find a lot of JS developers who would disagree that ‘this’ behavior is “broken”. The rules for how ‘this’ is bound are well defined, consistently implemented, and simple enough to understand.

I can understand why you might not like assigning ‘this’ to another variable or why you might tire of ‘bind’, but the simplicity of fat arrows would be hard to improve on… It doesn’t break existing functionality (a reasonable requirement) and it gives a clean but explicit way to bind your functions. Do you have another proposal?
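The three workarounds under discussion, side by side. A plain `function` passed as a callback gets its own `this`, so code must capture `this` in a variable, fix it with `bind`, or use an arrow function, which takes `this` lexically from the enclosing method (arrows later shipped in ES2015). The `callLater` helper and `counter` object are illustrative.

```javascript
// Calls fn with no receiver, as many callback-taking APIs do.
function callLater(fn) {
  return fn();
}

const counter = {
  count: 0,

  // 1. Capture `this` in another variable.
  withSelf() {
    const self = this;
    return callLater(function () {
      return ++self.count;
    });
  },

  // 2. Fix `this` with Function.prototype.bind.
  withBind() {
    return callLater(
      function () {
        return ++this.count;
      }.bind(this)
    );
  },

  // 3. Arrow function: `this` comes lexically from the enclosing method.
  withArrow() {
    return callLater(() => ++this.count);
  },
};

console.log(counter.withSelf());  // 1
console.log(counter.withBind());  // 2
console.log(counter.withArrow()); // 3

// With none of the above, a strict-mode callback sees `this` as undefined.
const unbound = function () {
  "use strict";
  return this;
};
console.log(callLater(unbound) === undefined); // true
```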

Perhaps I’m not experienced enough, but so far there hasn’t been a single situation where I found dynamically bound ‘this’ useful. Most of the time it’s just a source of easy-to-make bugs.

My personal proposal: Introduce new ‘that’ keyword that would work just as anyone new to JS would expect: it always points to the non-function object inside which current function was declared.

I guess this is something feasible for TC39 considering the fact that ‘var’ was already evolved into ‘let’.

I understand why you might feel that way, but the truth is that late binding of “this” is what makes prototypal inheritance possible.

Look at the following example and try to picture how this would work if “this” was not bound dynamically when each method was called:

```javascript
var proto = {
  getName: function () { return this.name; },
  getAge: function () { return this.age; }
};

// At this point, what does "this" in getName point to? Would you bind it to proto?

function Person(name, age) {
  this.name = name;
  this.age = age;
}

Person.prototype = proto;

// How about now? Does "this" still == proto?

function Dude(name, age) {
  Person.call(this, name, age);
}

Dude.prototype = new Person();

// And now? What is "this" bound to at this point?

Dude.prototype.getName = function () { return "Mr. " + this.name; };

// And with a function overridden here, is "this" in getName different from the "this" in getAge?
```

The reason this code works is that “this” is bound to the object upon which the function was called. That cannot be determined when the function is compiled, it must be determined at run-time.
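The point can be checked directly: `this` is determined by how a function is called, not where it is defined. A minimal sketch with hypothetical `alice` and `bob` objects sharing one prototype method:

```javascript
const proto = {
  getName: function () {
    "use strict";
    return this === undefined ? "(no receiver)" : this.name;
  },
};

const alice = Object.create(proto);
alice.name = "Alice";
const bob = Object.create(proto);
bob.name = "Bob";

// Same function object, different `this` at each call site.
console.log(alice.getName());               // Alice
console.log(bob.getName());                 // Bob
console.log(alice.getName === bob.getName); // true

// Extracted from its receiver, the same function sees no `this`.
const detached = alice.getName;
console.log(detached()); // (no receiver)

// call() supplies the receiver explicitly.
console.log(proto.getName.call({ name: "Carol" })); // Carol
```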

Rubian block lambdas are so much better than CoffeeScript-style arrow notation.

Also, arrows can be used for ES14 Haskelish Monads 😉

Very good post, thank you for sharing.

Yes, agreed: JavaScript is the best bet a developer can make for the Web. Also, developers often get very animated about particular language features, but what really determines performance and maintainability is code design and architecture, regardless of the language.

It’s not because you can hang yourself that you have to.