[air.mozilla.org video]

[slideshare.net link]

Disrupt any enterprise that requires new clothes.

— Thoreau (abridged) adjusted for Mozilla by @lawnsea.

I gave a brief talk last night at the Mozilla Research Party (first of a series), which happened to fall on the virtual (public, post-Easter-holiday) celebration of Mozilla’s 15th anniversary.

I was a last-minute substitution for Andreas Gal, fellow mad scientist co-founder at Mozilla Research, so I added one slide at his expense. (This talk was cut down and updated lightly from one I gave at MSR Paris in 2011.) Thanks to Andreas for letting me use two of his Facebook pics to show a sartorial pilgrim’s progress. Thanks also to Dave Herman and all the Mozilla Researchers.

Mozilla is 15. JavaScript is nearly 18. I am old. Lately I mostly just make rain and name things: Servo (now with Samsung on board) and asm.js. Doesn’t make up for not getting to name JS.

(Self-deprecating jokes aside, Dave Herman has been my naming-buddy, to good effect for Servo [MST3K lives on in our hearts, and will provide further names] and asm.js.)

Color commentary on the first set of slides:

I note that calling software an “art” (true by Knuth’s sensible definition) should not relieve us from advancing computer science, but I remain skeptical that software in the large can be other than a somewhat messy, social, human activity and artifact. But I could be wrong!

RAH’s Waldo featured pantograph-based manipulators, technology that scaled over perhaps not quite ten orders of magnitude, if I recall correctly (my father collected copies of the golden age Astounding pulps as a teenager in the late 1940s).

No waldoes operating over this scale in reality yet, but per Dijkstra, our software has been up to the challenge for decades.

I like Ken’s quote. It is deeply true of any given source file in an evolving codebase and society of coders. I added “you could almost say that code rusts.”

Here I would like to thank my co-founder and partner in Mozilla, Mitchell Baker. Mitchell and I have balanced each other out over the years, one of us yin to the other’s yang, in ways that are hard to put in writing. I can’t prove it, but I believe that until the modern Firefox era, if either of us had bailed on Mozilla, Mozilla would not be around now.

A near-total rewrite (SpiderMonkey and NSPR were conserved) is usually a big mistake when you already have a product in market. A paradox: this mistake hurt Netscape but helped Mozilla.

I lamented the way the Design Patterns book was waved around in the early Gecko (Raptor) days. Too much abstraction can be worse than too little. We took years digging out and deCOMtaminating Gecko.

As Peter Norvig argued, design patterns are bug reports against your programming language.

Still, the big gamble paid off for Mozilla, but it took a few more years.

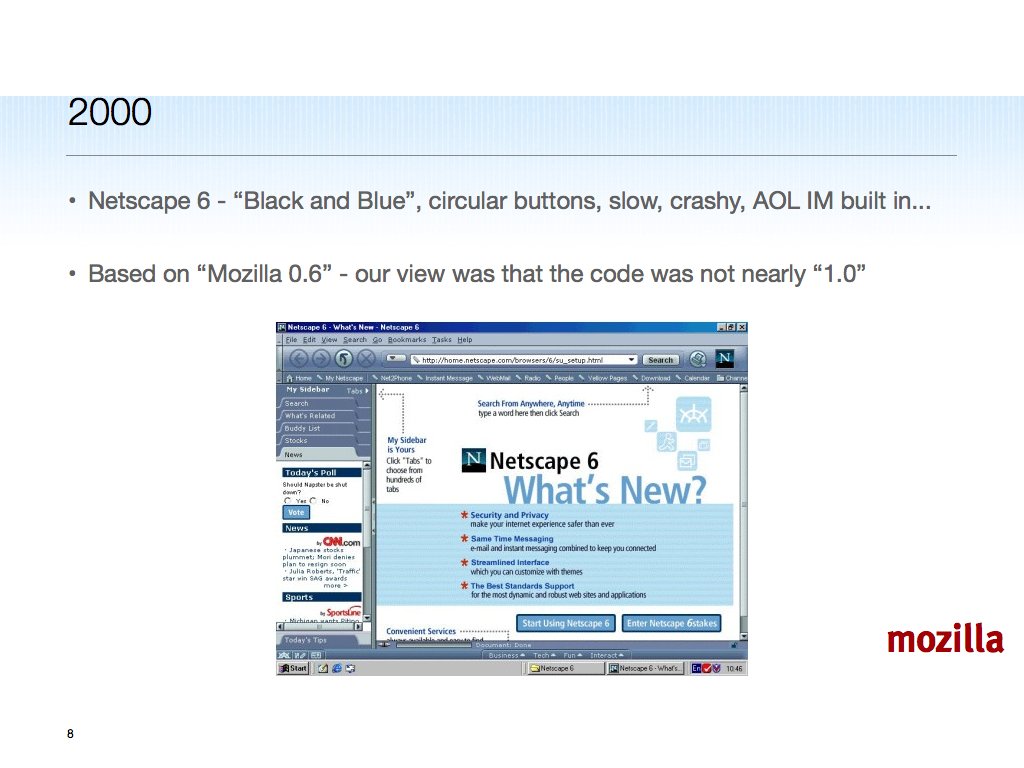

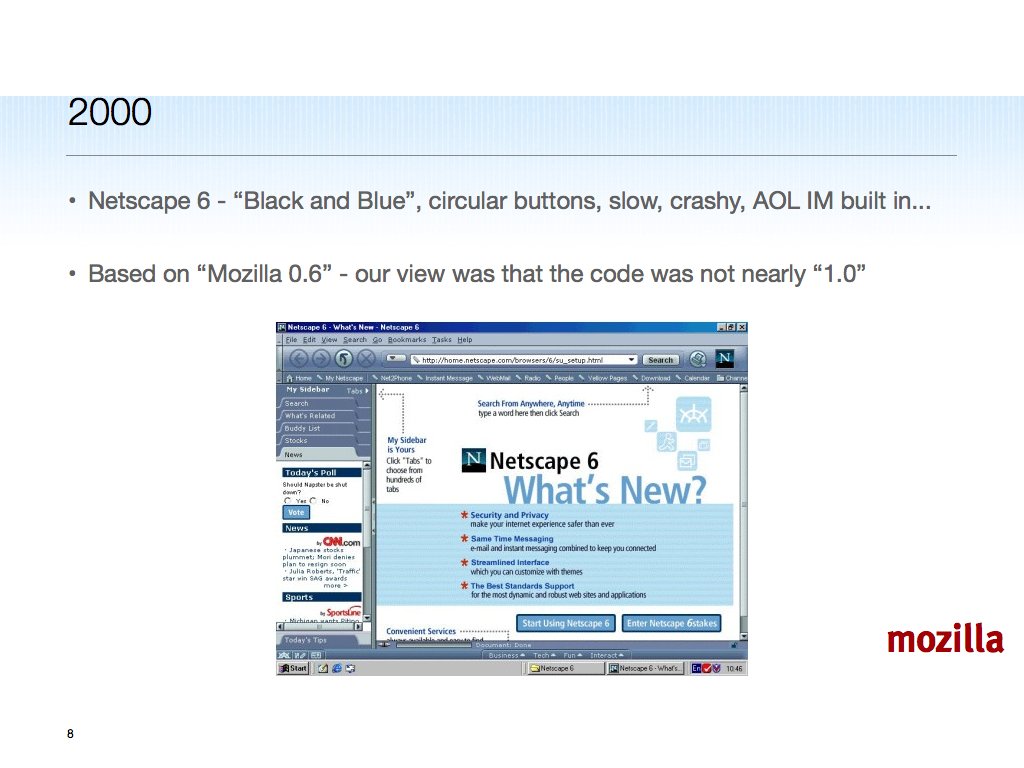

Who remembers Netscape 6? At the time some few managers with more ego than sense argued that “the team needs us to ship” as if morale would fall if we held off till Mozilla 1.0. (I think they feared that an AOL axe would fall.) The rank and file were crying “Nooo!!!!!”

AOL kept decapitating VPs of the Netscape division until morale improved.

2001: Another year, another VP beheading, but this one triggered a layoff used as a pretext to eliminate Mitchell’s position. The new VP expected no mo’ Mitchell, and was flummoxed to find that on the next week’s community-wide project conference call, there was Mitchell, wrangling lizards! Open source roles are not determined solely or necessarily by employment.

At least (at some price) we did level the playing field and manage our way through a series of rapid-release-like milestones (“the trains will run more or less on time!”) to Mozilla 1.0.

Mozilla 1.0 didn’t suck.

It was funny to start as a pure open source project, where jwz argued only those with a compiler and skill to use it should have a binary, and progress to the point where Mozilla’s “test builds” were more popular than Netscape’s product releases. An important clue, meaningful in conjunction with the nascent “mozilla/browser” focus on just the browser instead of a big ’90s-style app suite.

A lot of credit for the $2M from AOL to fund the Mozilla Foundation goes to Mitch Kapor. Thanks again, Mitch! This funding was crucial to get us to the launch-pad for Firefox 1.0.

We made a bit more money by running a Technical Advisory Board or TAB, which mostly took advice from enterprise companies, advice we proceeded to (mostly) ignore. The last TAB meeting was the biggest, and the one where Sergey Brin showed up representing Google.

Due to a back injury, Sergey stood a lot. This tended to intimidate some of the other TAB members, who were pretty clearly wondering “What’s going on? Should I stand too?” An accidental executive power move that I sometimes still employ.

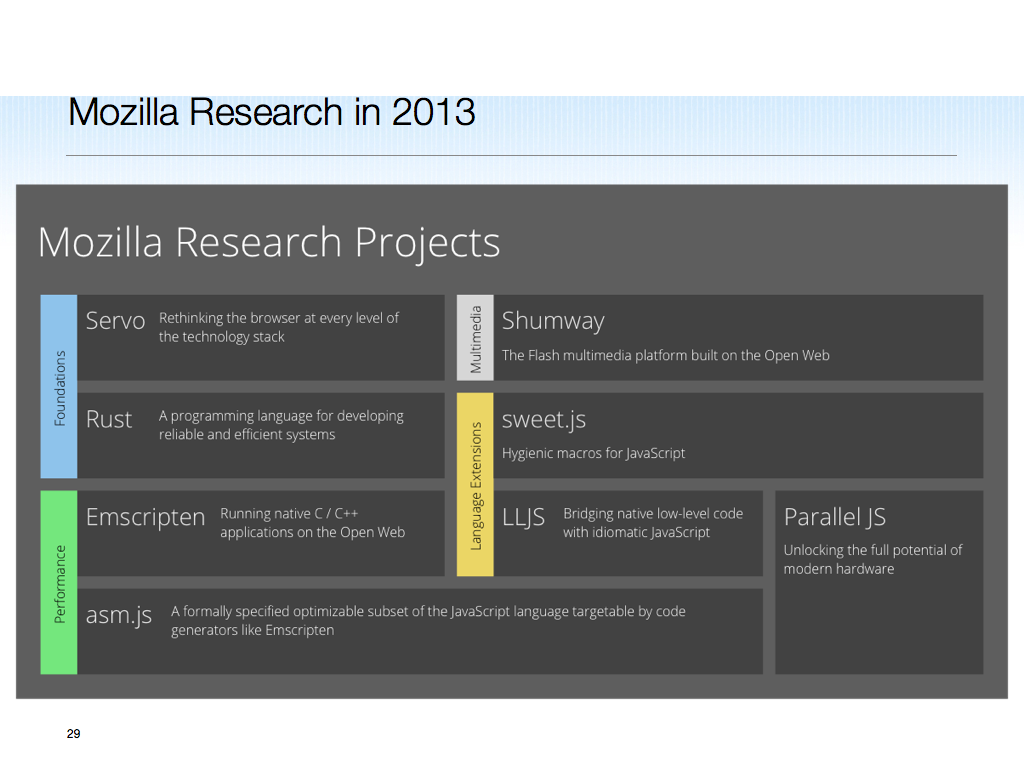

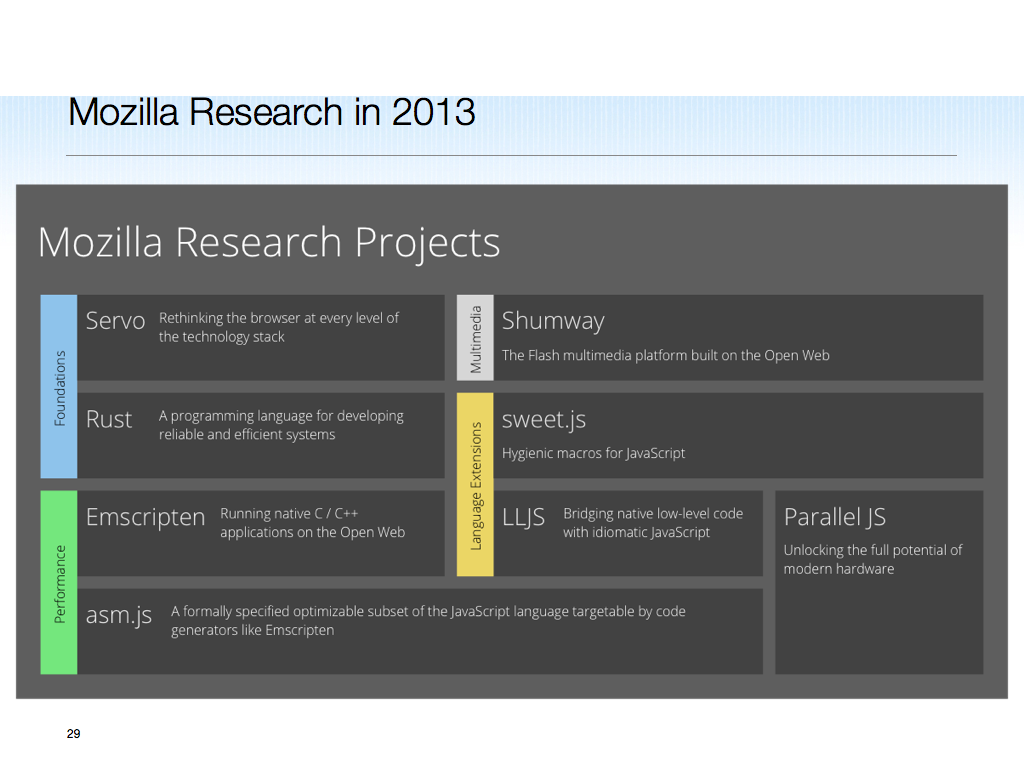

Jump to today: here is Rust beating GCC on an n-body solver. Safety, speed, and concurrency are a good way to go through college!

As you can see, Mozilla Research is of modest size, yet laser-focused on the Web, and appropriately ambitious. We have more awesome projects coming, along with lots of industrial partners and a great research internship program. Join us!

/be